Ghostbusting Timeshift+BTRFS

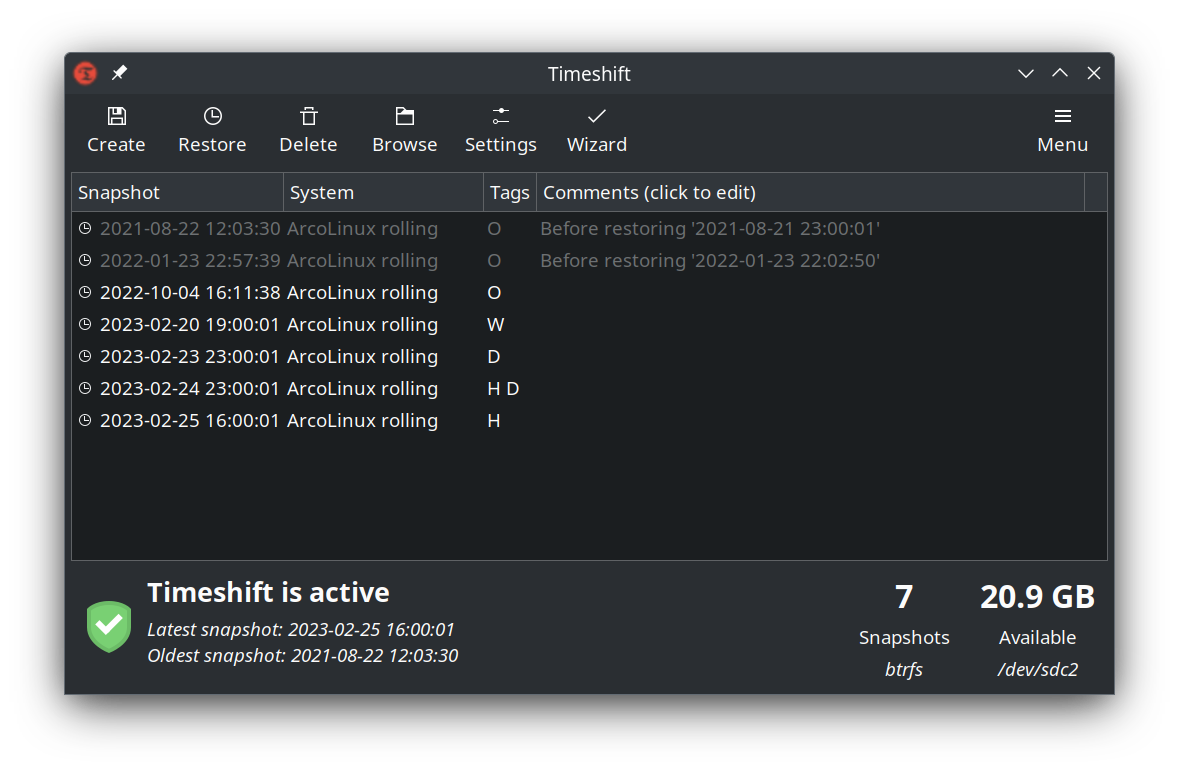

Today, before doing pacman -Syu, I opened Timeshift to create a snapshot, just in case.

I noticed that my “ghost snapshots” from 2021 and 2022 are still there, after all this time. I had already marked all of them for deletion in Timeshift (which is why they’re grayed out), but that didn’t have any effect. When I tried Right-click > Delete again, an error popped up, then disappeared on its own.

I smell something fishy, so let’s investigate.

[16:04:13] page: 1

[16:04:13] DeleteBox: delete_snapshots()

[16:04:13] Main: delete_begin()

[16:04:13] delete_begin(): thread created

[16:04:13] Main: delete_begin(): exit

[16:04:13] delete_thread()

[16:04:13] ------------------------------------------------------------------------------

[16:04:13] Removing snapshot: 2022-01-23_22-57-39

[16:04:13] Deleting subvolume: @ (Id:514)

[16:04:13] btrfs subvolume delete '/run/timeshift/3652/backup/timeshift-btrfs/snapshots/2022-01-23_22-57-39/@'

[16:04:14] E: ERROR: Could not destroy subvolume/snapshot: Directory not empty

[16:04:14] E: Failed to delete snapshot subvolume: '/run/timeshift/3652/backup/timeshift-btrfs/snapshots/2022-01-23_22-57-39/@'

[16:04:14] E: Failed to remove snapshot: 2022-01-23_22-57-39

[16:04:14] ------------------------------------------------------------------------------

This is what showed up in the logs. Something is clearly broken: btrfs refuses to delete the snapshot’s @ subvolume because the directory is not empty.

BTRFS subvolume

Subvolumes are the BTRFS feature that Timeshift uses to create its so-called snapshots.

From the official docs (simplified):

A BTRFS subvolume is a part of filesystem with its own independent file/directory hierarchy. A snapshot is also subvolume, but with a given initial content of the original subvolume.

A subvolume looks like a normal directory, with some additional operations described below. Subvolumes can be renamed or moved, nesting subvolumes is not restricted but has some implications regarding snapshotting.

What should be mentioned early is that snapshotting is not recursive, so a subvolume or a snapshot is effectively a barrier, and no files in nested subvolumes appear in the snapshot.
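To make the “barrier” idea concrete, here is a toy Python model (not BTRFS code): it decides whether a file inside a snapshotted subvolume would be captured, given the paths of nested subvolumes relative to that subvolume’s root. The function name and the example paths are hypothetical.

```python
from pathlib import PurePosixPath


def captured_by_snapshot(file_path: str, nested_subvolumes: list[str]) -> bool:
    """Toy model of non-recursive snapshots.

    file_path and nested_subvolumes are relative to the root of the
    subvolume being snapshotted. A file is captured only if it does not
    live below one of the nested subvolumes.
    """
    path = PurePosixPath(file_path)
    for nested in nested_subvolumes:
        # Everything below a nested subvolume belongs to that subvolume,
        # so the snapshot stops at this barrier and does not copy it.
        if PurePosixPath(nested) in path.parents:
            return False
    return True


# Hypothetical example: one nested Docker subvolume.
nested = ["var/lib/docker/btrfs/subvolumes/example"]
print(captured_by_snapshot("etc/fstab", nested))  # True
print(captured_by_snapshot("var/lib/docker/btrfs/subvolumes/example/etc/hosts", nested))  # False
```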

If you’ve played with btrfs-subvolume(8) commands before, or have set up your own BTRFS hierarchy, you’ll already be familiar with this.

First, let’s grab a list of all the subvolumes:

sudo btrfs subvolume list /

ID 256 gen 482600 top level 5 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@

ID 269 gen 138 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/d7f6fbf20db0c621054bc98e94431d8b0f360f529d9096ea4ce01fc7333945fb

ID 270 gen 139 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/744814a85589ad7ed33a8f421eb62d513b91035cf16f3e57ddd8f4feb79e5a37

ID 271 gen 140 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/15eafe1ab0f6f708a53d0a4a122a89a9be0948cb473f439e626fcab6545b04d1

ID 272 gen 141 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/1f3b0379f71a6b132d57b4c2a0d2b9608984c2d8b6b05163c0846f66d9d56530

ID 273 gen 142 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/e092351c61d078908f6e58ee1a037267245bbf2d84b7074409f80dbab9779e5b

ID 274 gen 143 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/73007feda28d68add7e60e4957365a0ab8e8fcf10dbc3df764ae20d0f89a640d

ID 275 gen 144 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/a5d02af33337f1df380521a173e867b4244bff8063521301ad1212854a10269b

ID 276 gen 145 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/8a4625113aa4947237ccbaa48975d7a598c332e7f9fbd53b8deac09c24c408ca

ID 277 gen 146 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/8cd036c22ec685a93a6b711b9046f894912c6d9abebbab3b1d502a473227c66a-init

ID 278 gen 12373 top level 256 path timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/8cd036c22ec685a93a6b711b9046f894912c6d9abebbab3b1d502a473227c66a

ID 257 gen 484362 top level 5 path @home

ID 258 gen 484276 top level 5 path @cache

ID 259 gen 484362 top level 5 path @log

ID 2902 gen 484362 top level 5 path @

ID 3153 gen 140430 top level 257 path @home/skyfalls/.local/share/docker/btrfs/subvolumes/38f0f1df5530ed5eabd7414799383512b53b89938488775899cefe653e95e165

...300 lines of docker volumes and other unrelated stuff

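We can see that the 2021-08-22_12-03-30 snapshot in Timeshift actually consists of multiple subvolumes: IDs 269 through 278 all have top level 256, meaning they are nested inside the snapshot’s @ (ID 256).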
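Following the top level column by hand gets tedious with 300+ entries. A minimal Python sketch that parses the output of btrfs subvolume list and collects everything nested under a given subvolume ID might look like this; it assumes the exact line format shown above, and the class and function names are hypothetical.

```python
import re
from dataclasses import dataclass

# Matches lines like:
# ID 269 gen 138 top level 256 path timeshift-btrfs/snapshots/.../@/var/lib/docker/...
LINE_RE = re.compile(r"^ID (\d+) gen (\d+) top level (\d+) path (.+)$")


@dataclass
class Subvolume:
    subvol_id: int
    gen: int
    parent: int  # the "top level" column: ID of the parent subvolume
    path: str


def parse_subvolume_list(text: str) -> list[Subvolume]:
    subvols = []
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:  # skip blank lines and anything that is not a subvolume entry
            sid, gen, parent, path = m.groups()
            subvols.append(Subvolume(int(sid), int(gen), int(parent), path))
    return subvols


def descendants(subvols: list[Subvolume], root_id: int) -> list[Subvolume]:
    """All subvolumes nested (directly or indirectly) under root_id."""
    children: dict[int, list[Subvolume]] = {}
    for sv in subvols:
        children.setdefault(sv.parent, []).append(sv)
    found, stack = [], [root_id]
    while stack:
        for child in children.get(stack.pop(), []):
            found.append(child)
            stack.append(child.subvol_id)
    return found
```

Feeding it the saved output of sudo btrfs subvolume list / and calling descendants(subvols, 256) would return the ten Docker subvolumes listed above.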

So somehow, Timeshift managed to perform a full recursive snapshot, then deleted the default/primary nested subvolumes (@home, @cache, @log as shown), leaving all docker volumes behind (which are also BTRFS subvolumes)?

Well, there’s one way to find out.

Manual repair

sudo btrfs subvolume delete /run/timeshift/6301/backup/timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/d7f6fbf20db0c621054bc98e94431d8b0f360f529d9096ea4ce01fc7333945fb

Delete subvolume (no-commit): '/run/timeshift/6301/backup/timeshift-btrfs/snapshots/2021-08-22_12-03-30/@/var/lib/docker/btrfs/subvolumes/d7f6fbf20db0c621054bc98e94431d8b0f360f529d9096ea4ce01fc7333945fb'

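Repeat this for every nested subvolume, leaving the snapshot’s own @ for last: deleting a subvolume that still contains nested subvolumes fails with the “Directory not empty” error from the log. Because this is a dangerous operation, I wouldn’t recommend scripting it. However, pasting the subvolume list into a text editor and turning it into shell commands should be fine.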
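In the same spirit, here is a minimal Python sketch that only builds the commands as text for you to review in an editor; it never runs anything. It assumes the paths printed by btrfs subvolume list / are relative to the top-level subvolume that Timeshift mounts at /run/timeshift/6301/backup (the number varies), which matches the commands above. The function name is hypothetical.

```python
import re
import shlex

LINE_RE = re.compile(r"^ID (\d+) gen \d+ top level \d+ path (.+)$")


def delete_commands(list_output: str, snapshot: str, mount_root: str) -> list[str]:
    """Build (but do not run) btrfs subvolume delete commands for one snapshot.

    list_output: text of `sudo btrfs subvolume list /`
    snapshot:    snapshot folder name, e.g. "2021-08-22_12-03-30"
    mount_root:  where Timeshift mounted the top-level subvolume,
                 e.g. "/run/timeshift/6301/backup"
    """
    prefix = f"timeshift-btrfs/snapshots/{snapshot}/@"
    paths = []
    for line in list_output.splitlines():
        m = LINE_RE.match(line.strip())
        if not m:
            continue
        path = m.group(2)
        # Keep the snapshot's own @ and everything nested below it.
        if path == prefix or path.startswith(prefix + "/"):
            paths.append(path)
    # Deepest paths first: a nested subvolume must be deleted before its
    # parent, otherwise the parent fails with "Directory not empty".
    paths.sort(key=lambda p: p.count("/"), reverse=True)
    return [
        f"sudo btrfs subvolume delete {shlex.quote(mount_root + '/' + p)}"
        for p in paths
    ]
```

Print the returned lines, paste them into a text editor, check each one, and only then run them by hand.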

Note

- The number `6301` here will change every time you open Timeshift.
- The whole folder is only mounted while Timeshift is running (it is the filesystem’s top-level subvolume, ID 5, which contains `@` but is not directly mounted by the OS).
- Snapshots will always be in `/run/timeshift/%d/backup/timeshift-btrfs`. Every snapshot appears in `./snapshots`, and also in `./snapshots-{boot,daily,hourly,monthly,weekly}`.
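Because the number changes on every launch, a small Python sketch can locate the current mount by globbing for it. It assumes the layout from the note above (`/run/timeshift/<number>/backup/timeshift-btrfs/snapshots`) and only finds something while Timeshift is open; the function name is hypothetical.

```python
import glob
import os


def find_timeshift_snapshot_dirs(run_dir: str = "/run/timeshift") -> list[str]:
    """Return the snapshots directories of any currently mounted Timeshift backup.

    Assumed layout: <run_dir>/<number>/backup/timeshift-btrfs/snapshots,
    where <number> changes every time Timeshift is opened.
    """
    pattern = os.path.join(run_dir, "*", "backup", "timeshift-btrfs", "snapshots")
    return sorted(p for p in glob.glob(pattern) if os.path.isdir(p))
```

An empty list simply means Timeshift is not running, so nothing is mounted.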

Lastly, delete the snapshot’s own @ subvolume:

sudo btrfs subvolume delete /run/timeshift/6301/backup/timeshift-btrfs/snapshots/2021-08-22_12-03-30/@

Then remove the whole snapshot folder; this is where Timeshift stores the snapshot’s metadata.

Check ./info.json for more details.

pwd

/run/timeshift/6301/backup/timeshift-btrfs/snapshots/2021-08-22_12-03-30

ls -lah

total 4.0K

drwxr-xr-x 1 root root 30 Feb 25 16:29 .

drwxr-xr-x 1 root root 266 Feb 25 16:00 ..

-rw-r--r-- 1 root root 0 Feb 25 16:24 delete

-rw-r--r-- 1 root root 444 Sep 22 2021 info.json

cd ..

sudo rm -r 2021-08-22_12-03-30
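To spot other ghosts, a minimal Python sketch can list snapshot folders that still exist but carry a `delete` file. This rests on an assumption: that the empty `delete` file in the listing above is the marker Timeshift leaves when a snapshot is marked for deletion. It loads info.json as plain JSON without assuming any of its fields; both function names are hypothetical.

```python
import json
import os


def marked_for_deletion(snapshots_dir: str) -> list[str]:
    """Snapshot folders that still exist but contain a `delete` file.

    Assumption: the empty `delete` file is Timeshift's "marked for deletion"
    marker, so a folder that keeps it across restarts is a ghost candidate.
    """
    names = []
    for name in sorted(os.listdir(snapshots_dir)):
        if os.path.isfile(os.path.join(snapshots_dir, name, "delete")):
            names.append(name)
    return names


def read_info(snapshots_dir: str, name: str) -> dict:
    """Load a snapshot's info.json metadata without assuming its fields."""
    with open(os.path.join(snapshots_dir, name, "info.json"), encoding="utf-8") as f:
        return json.load(f)
```

Point it at `/run/timeshift/6301/backup/timeshift-btrfs/snapshots` (with whatever number Timeshift is currently using) and print each candidate next to its read_info result before deciding what to remove.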

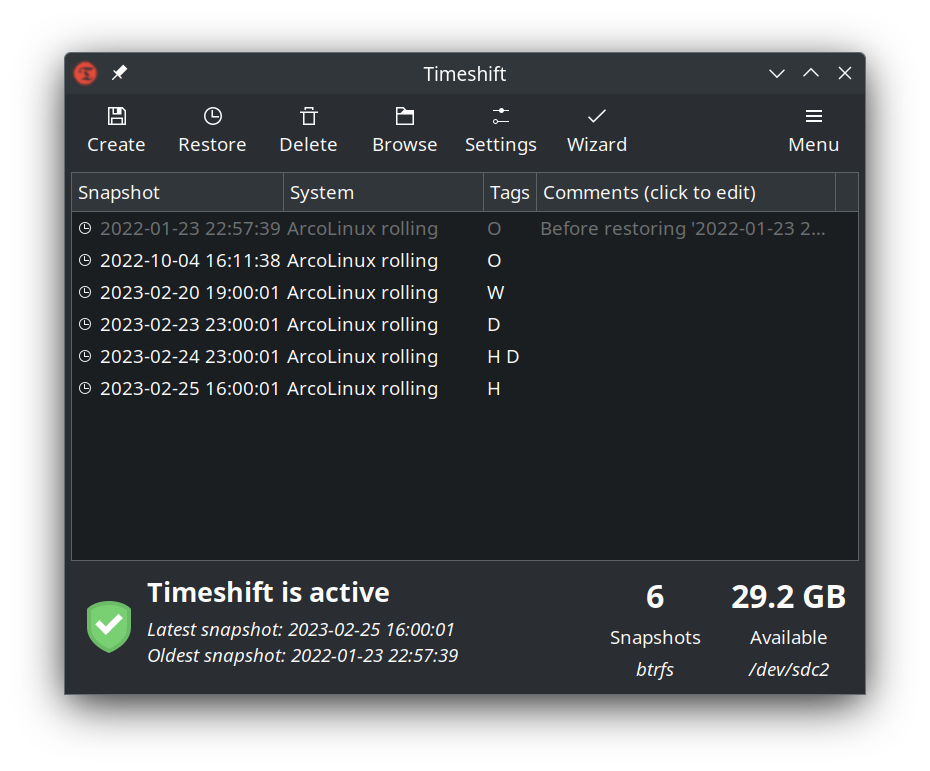

After restarting Timeshift, we can see that the ghost snapshot is gone, and the space occupied by it has been reclaimed.

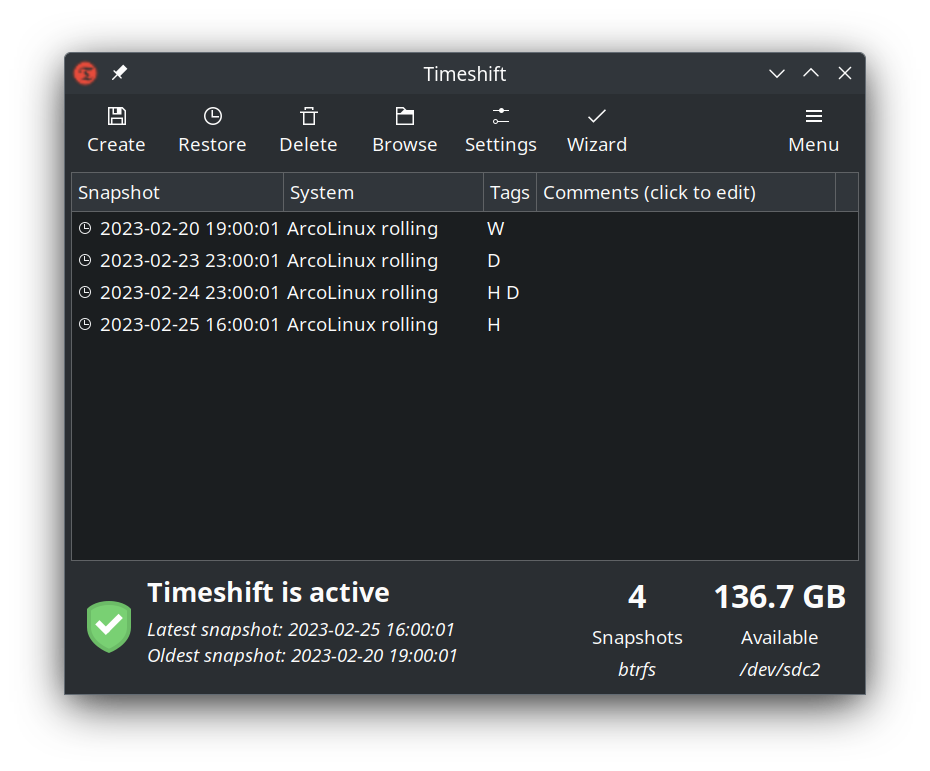

I then deleted the other 2 old snapshots.

After a tremendous amount of lag lasting 5 minutes (btrfs-cleaner hard at work), I came back to 100 GB of freed storage.

Guess that’s how you maintain a healthy level of free disk space.

You occupy x amount of space with something, and delete that when you need more space.

Awesome stuff.